Have you ever wondered how a search engine works? In this guide, we explain the aim of a search engine algorithm and how it influences a search engine results page (SERP). We also cover crawling and indexing, along with crawl budget and how it can affect your business site.

Search engines work by discovering (crawling) hundreds of millions of pages using their own web crawlers, which SEO specialists and engineers refer to as search engine bots or spiders. A search engine navigates the web by downloading web pages and following the links on those pages to discover new pages that have been made available.

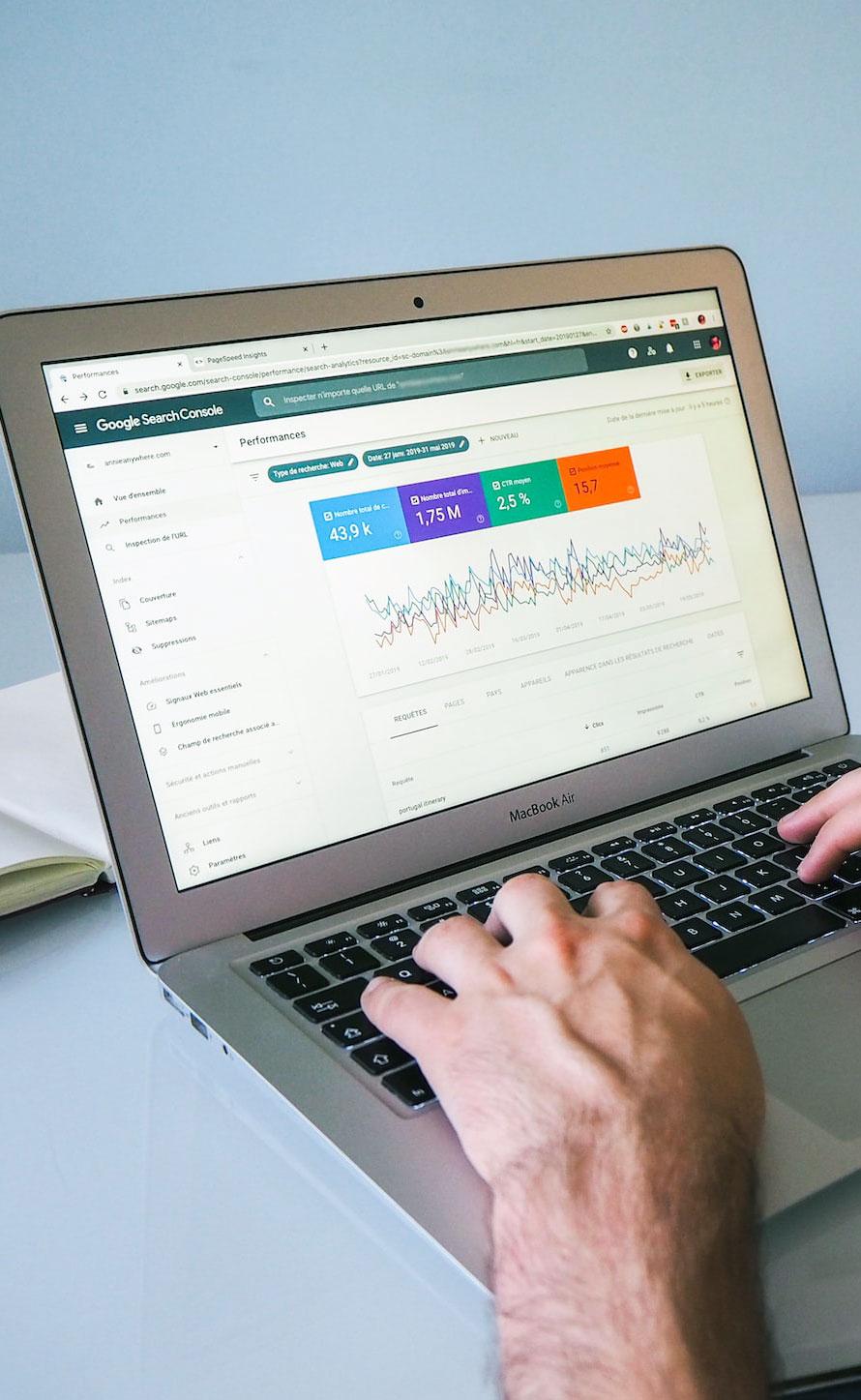
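To make the "download a page, follow its links" loop concrete, here is a minimal sketch of a crawler. It is an illustration under stated assumptions, not how any real search engine is implemented: the `FAKE_WEB` dictionary, the `LinkExtractor` class and the `crawl` function are hypothetical names, and the pages live in memory instead of being fetched over HTTP.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. A real crawler would download these.
FAKE_WEB = {
    "https://example.com/": (
        '<a href="https://example.com/about">About</a> '
        '<a href="https://example.com/blog">Blog</a>'
    ),
    "https://example.com/about": '<a href="https://example.com/">Home</a>',
    "https://example.com/blog": '<a href="https://example.com/blog/post-1">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed, web):
    """Breadth-first discovery of every page reachable from the seed URL."""
    discovered = [seed]
    seen = {seed}
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        html = web.get(url)
        if html is None:
            continue  # the page could not be "downloaded"
        parser = LinkExtractor()
        parser.feed(html)
        for link in parser.links:
            if link not in seen:  # only queue pages we have not met before
                seen.add(link)
                discovered.append(link)
                queue.append(link)
    return discovered


print(crawl("https://example.com/", FAKE_WEB))
```

Starting from the home page, the sketch finds the about and blog pages first, and only then the blog post, because that post is linked from the blog page alone. A page that nothing links to would never be discovered this way.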

In Google’s case, a tool called Google Search Console lets you help its crawler discover your newest web pages by submitting a sitemap or by requesting indexing for your latest pages.
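For readers who have not seen one, a sitemap is simply an XML file listing your URLs. The sketch below builds a small one with Python’s standard library. The namespace comes from the sitemaps.org protocol; the `build_sitemap` helper, the URLs and the dates are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; replace with your own URLs and dates.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/new-post", "2024-02-01"),
])
print(sitemap_xml)
```

The resulting file would typically be saved as `sitemap.xml` on your site and then submitted through Google Search Console.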

The aim is to inform Google’s crawler that the pages exist; it doesn’t necessarily mean they will be crawled and indexed immediately. Sometimes you will notice that your submitted pages have been crawled but not indexed.

This can happen for a number of reasons, such as duplicate content or thin, low-quality content. In some cases the cause is a directive on the page itself, such as a noindex tag, or an exclusion in the site’s robots.txt file. Discussing these reasons in detail is a topic for another post.
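The two directives mentioned above can be checked with a few lines of Python. This is a rough sketch, assuming a made-up robots.txt, a made-up crawler name (`ExampleBot`) and hypothetical helper names; it uses the standard library’s `urllib.robotparser` and `html.parser`.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())


def is_crawl_allowed(url, user_agent="ExampleBot"):
    """True if the robots.txt rules above allow this user agent to fetch the URL."""
    return robots.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Detects <meta name="robots" content="...noindex..."> in a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            if "noindex" in (attrs.get("content") or "").lower():
                self.noindex = True


def has_noindex(html):
    """True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


print(is_crawl_allowed("https://example.com/blog/post-1"))     # True
print(is_crawl_allowed("https://example.com/private/report"))  # False
print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
```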

What is the search engine index?

Webpages that have been discovered by a search engine are added into a data structure called an index. The index includes all the discovered URLs along with a number of relevant signals about the content of each URL such as:

- the key terms & topics the page content covers

- the type of content that is being crawled

- the freshness of the page

- the user engagement with the page and/or domain

- the link profile associated with that page and/or domain

- the structure of the page and/or domain

- the status codes for that page and/or the entire domain

In short, the index is populated by webpages that meet the criteria the search engine has put in place through its algorithm. It is much like a library whose shelves are sorted by genre, author, title, type, shape, colour of the book, and so on.
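As a rough mental model, you can picture each index entry as a record holding a URL plus a handful of signals. The sketch below is only an illustration: the `IndexEntry` fields, the `add_to_index` helper and the "status code must be 200" criterion are all assumptions, and real search engines store far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class IndexEntry:
    """One record in a toy index; every field name here is hypothetical."""
    url: str
    key_terms: list = field(default_factory=list)
    content_type: str = "article"
    last_modified: str = ""  # freshness, as an ISO date string
    backlink_count: int = 0  # a crude stand-in for the link profile
    status_code: int = 200


index = {}


def add_to_index(entry):
    """Store only pages that pass a simple, assumed criterion: a 200 status code."""
    if entry.status_code == 200:
        index[entry.url] = entry
        return True
    return False


add_to_index(IndexEntry("https://example.com/blog", ["seo", "crawling"], backlink_count=12))
add_to_index(IndexEntry("https://example.com/old-page", status_code=404))
print(sorted(index))  # only the page that returned 200 is stored
```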

What is the aim of a search engine algorithm?

The search engine algorithm’s goal is to return a relevant set of high-quality search results that answer the user’s query/question as quickly as possible.

The user then chooses an option from the list of search results, and this action, along with subsequent activity, feeds into future learning, which might affect future search engine rankings.

What happens when you run a search?

When a user enters a search query into a search engine, all of the pages deemed relevant from the index are identified, and an algorithm is used to hierarchically rank the relevant sites into a set of results.

The algorithms used to prioritise the most relevant results vary from one search engine to another. For example, a page that ranks highly in Google may not rank highly in Bing for the same query.
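A toy example shows how two ranking approaches can order the same pages differently. Both scoring functions below are invented for illustration and do not represent how Google, Bing or any other search engine actually ranks; the pages and backlink counts are made up as well.

```python
# Hypothetical pages with some text and a made-up backlink count.
PAGES = {
    "page-a": {"text": "coffee coffee coffee shop", "backlinks": 2},
    "page-b": {"text": "best coffee shop in town with fresh coffee and cakes", "backlinks": 40},
}


def term_count_score(page, query_terms):
    """Engine 1 (assumed): count every occurrence of each query term."""
    words = page["text"].split()
    return sum(words.count(term) for term in query_terms)


def link_weighted_score(page, query_terms):
    """Engine 2 (assumed): reward matched terms, then add the backlink count."""
    words = set(page["text"].split())
    matched = sum(1 for term in query_terms if term in words)
    return matched * 10 + page["backlinks"]


def rank(pages, query, score):
    """Return page names ordered from highest to lowest score."""
    terms = query.lower().split()
    return sorted(pages, key=lambda name: score(pages[name], terms), reverse=True)


print(rank(PAGES, "coffee shop", term_count_score))     # ['page-a', 'page-b']
print(rank(PAGES, "coffee shop", link_weighted_score))  # ['page-b', 'page-a']
```

The first function favours the page that repeats the query terms most often, while the second favours the page with more backlinks, so the same query yields a different order.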

In addition to the search query, search engines use other relevant data to return results, including:

- Location – Some search queries are location-dependent e.g. ‘cafes near me’ or ‘movie times’.

- Language detected – Search engines will return results in the language of the user, if it can be detected.

- Previous search history – Search engines will return different results for a query depending on what the user has previously searched for.

- Device – A different set of results may be returned based on the device from which the query was made.

What is search engine indexing?

Indexing is the technique through which search engines organise material prior to a search in order to provide lightning-fast responses to queries.

Without an index, search engines would have to crawl individual pages for keywords and subjects every time in order to find relevant material. Search engines (including Google) instead employ an inverted index, also known as a reverse index.

What is an inverted index?

An inverted index is a system that compiles a database of text elements along with pointers to the documents that contain those elements. Through a technique known as tokenization, search engines break text into individual terms, which are often also reduced to their base form (a step usually called stemming or lemmatisation), lowering the amount of resources required to store and retrieve data.

This method is far more efficient than listing all known documents against all relevant keywords and characters.
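Here is a minimal sketch of an inverted index, assuming three made-up documents and a very simple tokenizer that lowercases text and splits it into words. The function names are hypothetical, and the sketch leaves out stemming, so "engine" and "engines" would be treated as different terms.

```python
import re
from collections import defaultdict

# Hypothetical documents: ID -> text.
DOCS = {
    1: "How search engines crawl the web",
    2: "Search engines rank pages",
    3: "Crawl budget explained",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return the IDs of documents containing every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


inverted = build_inverted_index(DOCS)
print(search(inverted, "search engines"))  # {1, 2}
print(search(inverted, "crawl budget"))    # {3}
```

Answering a query only requires looking up each term and intersecting the resulting sets; no document has to be re-read at search time.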

The importance of backlinks in how a search engine works

Backlinks are an important part of how search engines determine the significance of a page. Several investigations and tests have been conducted to determine the relationship between backlinks and rankings.

According to Moz’s backlink research, 99.2% of the top 50 Google search results examined (15,000 search results) had at least one external backlink. As mentioned earlier in this article, the index uses backlinks to understand the relevancy and freshness of a page’s content and where it should appear in the SERP.

What is crawl budget?

Crawl budget is the number of URLs on a website that a search engine will crawl in a given time period, and it is a function of crawl rate and crawl demand. The crawl budget is constrained to ensure that a website’s server is not overloaded with too many requests.

Crawl rate is defined as the number of URLs per second that a search engine will attempt to crawl on a site. This is normally proportional to the number of active HTTP connections that it chooses to open simultaneously.

The crawl rate limit can be defined as the maximum fetching rate that can be achieved without degrading the experience of visitors to a site.

So, why is the crawl budget important in how a search engine works? It’s simple. Each website, depending on various criteria ranging from the web hosting server to the size of its pages, is allocated a certain budget by the respective search engine’s crawler. It is therefore highly improbable that all of your website’s pages will be crawled and processed in one go.
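Some back-of-the-envelope arithmetic makes this clearer. The sketch below assumes a simplified model in which the daily budget is the smaller of what the crawl rate allows and what the crawler actually wants to fetch (crawl demand). The function names and all the numbers are hypothetical, and real search engines do not publish an exact formula.

```python
import math


def daily_crawl_budget(crawl_rate_per_second, crawl_seconds_per_day, crawl_demand):
    """URLs crawled per day: rate-based capacity, capped by crawl demand (assumed model)."""
    capacity = int(crawl_rate_per_second * crawl_seconds_per_day)
    return min(capacity, crawl_demand)


def days_to_crawl(total_urls, daily_budget):
    """Number of days needed to visit every URL once at the given daily budget."""
    return math.ceil(total_urls / daily_budget)


# Made-up figures: 0.5 URLs per second, crawler active 600 seconds a day,
# and the search engine is interested in up to 1,000 URLs a day.
budget = daily_crawl_budget(0.5, 600, crawl_demand=1000)
print(budget)                         # 300
print(days_to_crawl(10_000, budget))  # 34
```

With these made-up figures, a site with 10,000 URLs would need about 34 days for every page to be visited once, which is why large sites benefit from keeping low-value URLs out of the crawler’s way.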

In conclusion, search engines are powerful tools that help consumers discover relevant web content, especially when 51% of consumers use search to learn about other people’s experiences with a product or service.

Web crawlers are employed by search engines to discover and index online pages, and an algorithm is used to rank the relevant sites in a collection of results in a hierarchical order. The search engine algorithm’s goal is to return a relevant collection of high-quality search results that respond to the user’s query/question as quickly as possible.

Backlinks, content quality and page size are essential variables in how search engines judge the importance of a page and how frequently they crawl a website. Knowing the fundamentals of how search engines operate can assist businesses in optimising their websites for increased visibility and search engine rankings.

If you are struggling to understand why it is important to know how a search engine works and how this can help you improve your business’s online presence, please feel free to contact us. We are more than happy to assist and offer our advice.